We compared three training strategies: image-only, two-stage, and our stochastic proxy replay. Image-only training produced superficially smooth but static videos (dynamic degree 0.84). Two-stage training raised the dynamic degree to 81.51 but introduced severe artifacts and S2V forgetting. Our alternating strategy balanced motion smoothness (98.45) and dynamic degree (69.64) without the drawbacks of the other two methods.

Our Approach

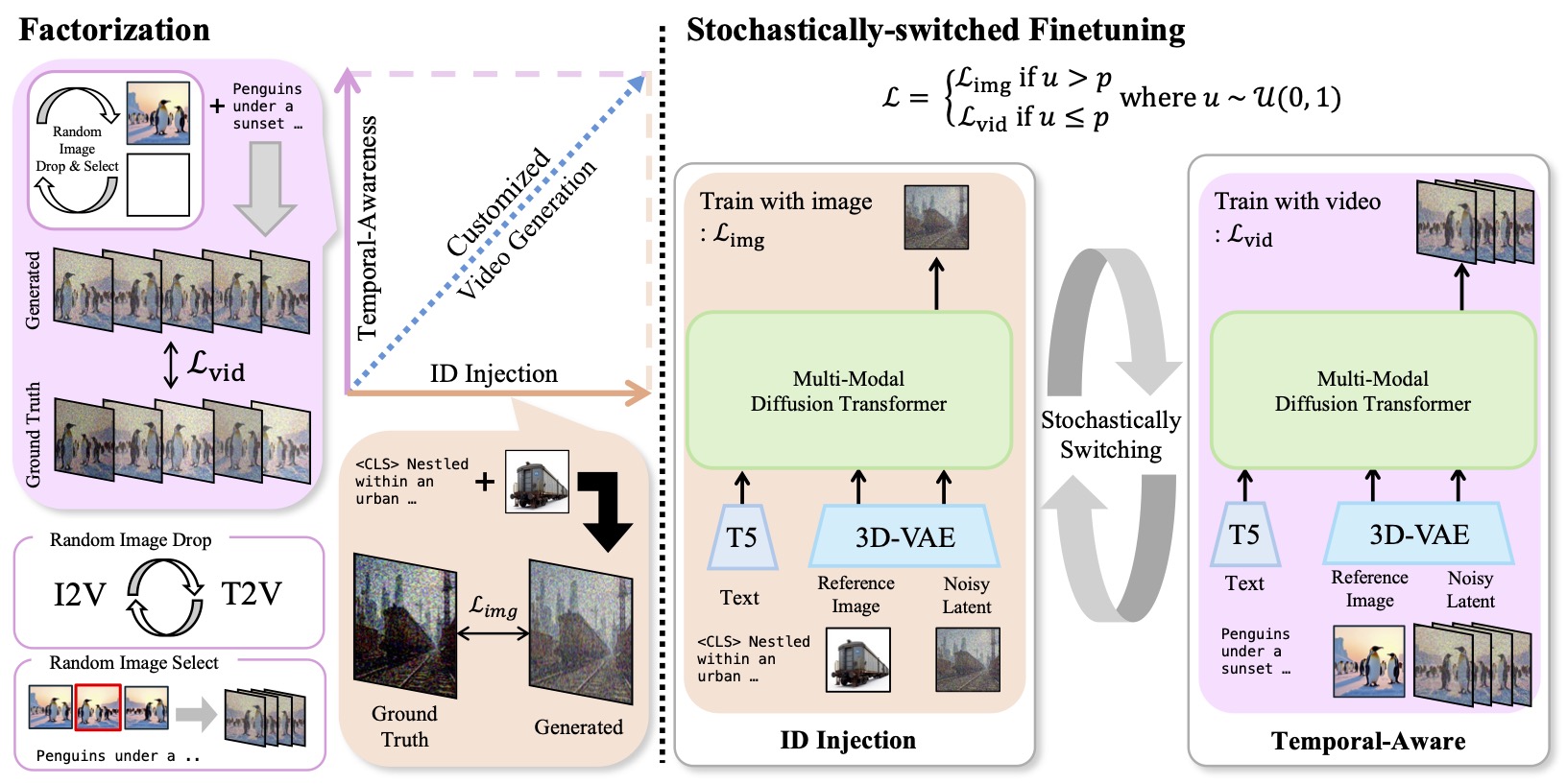

We view subject-driven video generation as a dual-task learning problem with two objectives: identity injection, which learns subject-specific appearance from S2I pairs, and temporal awareness preservation, which maintains motion dynamics using generic text-video pairs.

We optimize these two objectives with a simple stochastic task-switching scheme. At every iteration we sample which loss to use: with mixing probability p = 0.2 we take an I2V temporal update on a proxy video, and otherwise we take an S2I identity update. In expectation, 80% of updates come from S2I batches and 20% from proxy videos. To prevent trivial first-frame copying, we introduce random frame selection and image-token dropping during I2V updates.
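The switching rule above can be sketched in a few lines of Python. This is a minimal illustration, not the training code: only the mixing probability p = 0.2 comes from the text, while the function names, the task labels, and the per-token `drop_prob` value are hypothetical placeholders chosen for the example.

```python
import random

P_VIDEO = 0.2  # mixing probability stated in the text


def choose_task(rng, p_video=P_VIDEO):
    """Pick the loss for one iteration: I2V with probability p_video, else S2I."""
    return "i2v_temporal" if rng.random() < p_video else "s2i_identity"


def select_conditioning_frame(num_frames, rng):
    """Random frame selection: condition on any frame, not always the first."""
    return rng.randrange(num_frames)


def drop_image_tokens(tokens, rng, drop_prob=0.5):
    """Image-token dropping (assumed form): drop each token independently.

    drop_prob is a hypothetical value; the text does not specify it.
    """
    return [t for t in tokens if rng.random() >= drop_prob]


def simulate_schedule(num_steps, seed=0):
    """Count how often each task is chosen over num_steps iterations."""
    rng = random.Random(seed)
    counts = {"s2i_identity": 0, "i2v_temporal": 0}
    for _ in range(num_steps):
        counts[choose_task(rng)] += 1
    return counts


if __name__ == "__main__":
    counts = simulate_schedule(10_000)
    print(counts)  # the I2V share should be close to 20%
```

Because the task is drawn independently at each step, the two objectives interleave throughout training rather than running in separate phases.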

Our main contributions are:

- We cast subject-driven video customization as a dual-task problem that combines identity injection from subject-to-image pairs with temporal preservation from a small set of unpaired videos, enabling tuning-free S2V without expensive subject-video pairs.

- We introduce a stochastic task-switching scheme with proxy video replay, random frame selection, and image-token dropping, allowing the video model to preserve motion while being fine-tuned mostly on S2I data.

- We analyze the gradients of the identity and temporal objectives in a real video model, showing emergent near-orthogonality under stochastic dual-task updates and achieving strong zero-shot S2V with 288 A100-hours, 4K proxy videos, and as few as 4K S2I pairs.

Data and Compute Comparison

| Method | Dataset Size | Base Model | A100 Hours | Domain |
|---|---|---|---|---|
| Per-subject tuned methods (require fine-tuning for each subject) | | | | |
| CustomCrafter | 200 reg. images / subj. | VideoCrafter2 (1.4B) | ~200 / subj. | Object |
| Still-Moving | few images + 40 videos | Lumiere (1.2B) | – | Face/Object |
| Tuning-free methods (zero-shot generalization) | | | | |
| VACE | ~53M videos | LTX & Wan (14B) | 70K–210K | General |
| Phantom | 1M subject-video pairs | Wan (1.3–14B) | 10K–30K | Face/Object |
| Consis-ID | 130K clips | CogVideoX (5B) | – | Face |
| Ours | 200K S2I + 4K videos | CogVideoX (5B) | 288 | Object |

Our method achieves 35 to 730× lower training cost than tuning-free baselines while requiring no per-subject fine-tuning at inference.
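The 35 to 730× range follows from the A100-hour column of the table. The short check below reproduces the arithmetic; it uses only the tuning-free baselines that report compute (VACE and Phantom), and the variable and function names are illustrative.

```python
OURS_A100_HOURS = 288

# (low, high) A100-hour ranges reported in the comparison table
BASELINE_A100_HOURS = {
    "VACE": (70_000, 210_000),
    "Phantom": (10_000, 30_000),
}


def cost_ratio(baseline_hours, ours_hours=OURS_A100_HOURS):
    """How many times more compute the baseline used than ours."""
    return baseline_hours / ours_hours


def ratio_range(baselines=BASELINE_A100_HOURS):
    """Smallest and largest cost ratio across all reported baselines."""
    ratios = [cost_ratio(h) for bounds in baselines.values() for h in bounds]
    return min(ratios), max(ratios)


if __name__ == "__main__":
    low, high = ratio_range()
    print(f"{low:.1f}x to {high:.1f}x")
```

The lower bound comes from Phantom's smallest configuration (10K hours) and the upper bound from VACE's largest (210K hours).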

Comparison with Tuning-free Baselines

Quantitative Evaluation

We evaluate our method on VBench and Open-S2V Benchmark. Despite using only 288 A100 GPU hours for training (35 to 730× less than tuning-free baselines), our method achieves comparable performance across key metrics including motion smoothness, text alignment, and identity preservation.

On VBench, we achieve strong results in dynamic degree and identity consistency. On Open-S2V (single-domain track), we obtain competitive scores without any per-subject fine-tuning.

Human Face Generation

Although our training data primarily consists of general objects, our method can also generate videos with human faces. This demonstrates the generalization capability of our dual-task learning framework.

Gradient Dynamics in Dual-Task Learning

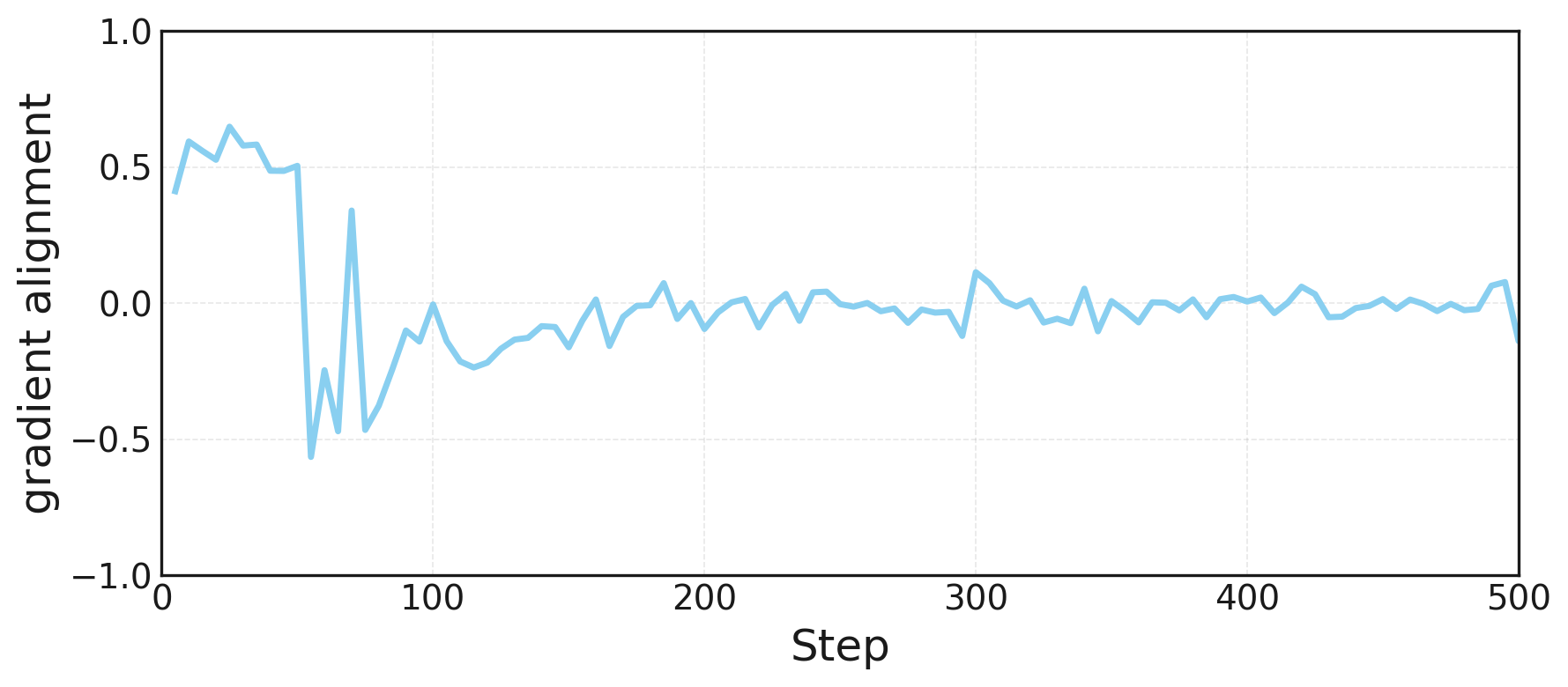

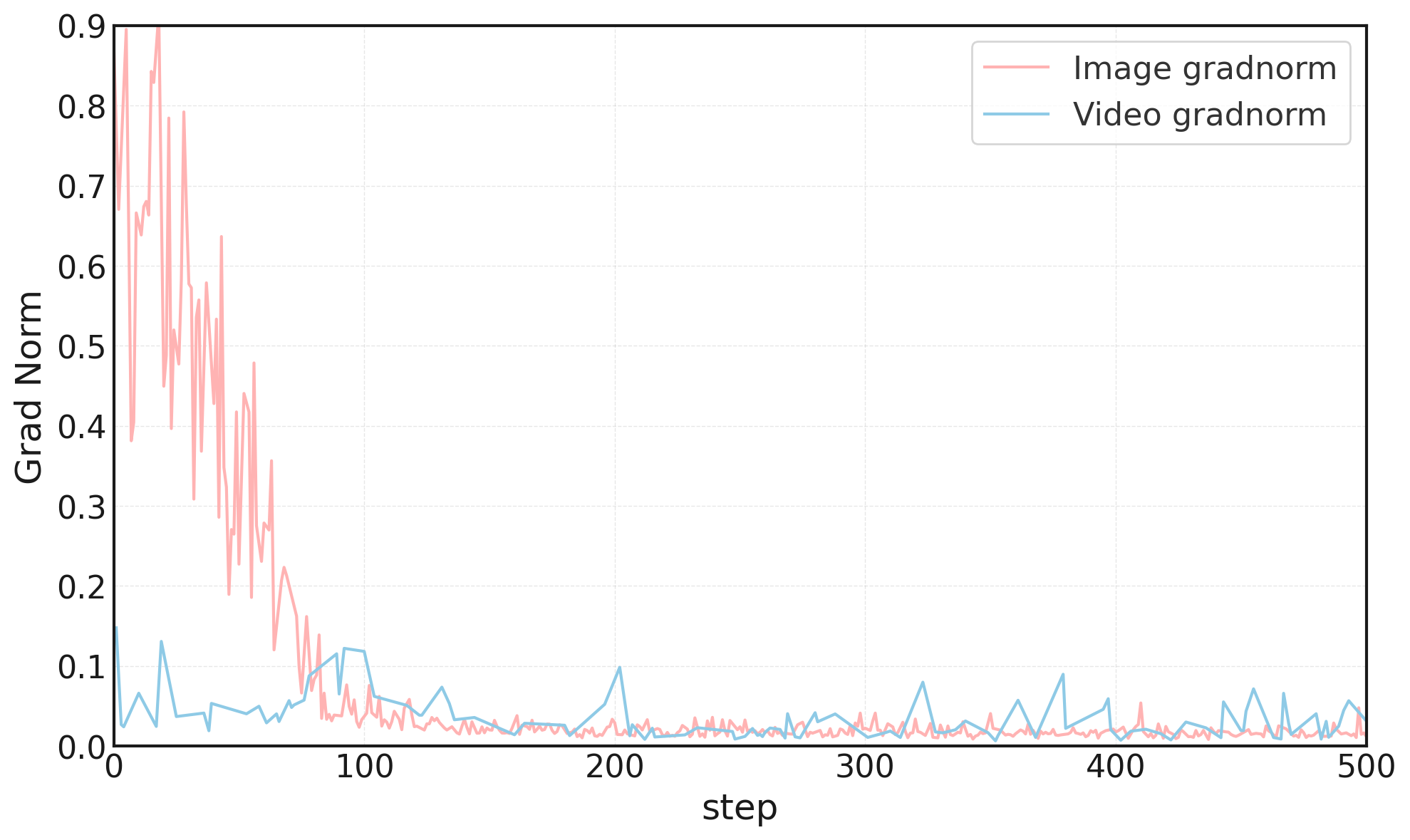

We analyze the gradient dynamics of our dual-task learning framework by measuring the cosine similarity and norms of the identity gradient (gimg) and temporal gradient (gvid) throughout training.

Gradient Conflict

Cosine similarity φ(t) between gimg and gvid rapidly collapses to a narrow band near zero, revealing emergent near-orthogonality between the objectives.

Gradient Norms

Both ∥gimg(t)∥ and ∥gvid(t)∥ remain non-negligible throughout training, indicating that the decay of cosine similarity reflects a genuine change in gradient direction.

This empirical observation supports the hypothesis that, after a short transient, identity injection and temporal preservation occupy nearly orthogonal directions in parameter space. This enables joint optimization without catastrophic interference between identity and motion learning, unlike sequential or single-task approaches.
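For reference, the cosine similarity tracked above can be computed as in the sketch below, which treats each gradient as a flat list of floats. The function names are illustrative and this is not the paper's measurement code. The intuition for why near-orthogonality helps is a first-order one: a small step of size η along −gvid changes the identity loss by roughly −η⟨gimg, gvid⟩, which vanishes when the two gradients are orthogonal.

```python
import math


def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def norm(u):
    """Euclidean norm of a vector."""
    return math.sqrt(dot(u, u))


def cosine_similarity(g_img, g_vid):
    """Cosine of the angle between two flattened gradients (0.0 if either is zero)."""
    n_img, n_vid = norm(g_img), norm(g_vid)
    if n_img == 0.0 or n_vid == 0.0:
        return 0.0
    return dot(g_img, g_vid) / (n_img * n_vid)


def first_order_interference(g_img, g_vid, lr):
    """First-order change in the identity loss after a step of -lr * g_vid."""
    return -lr * dot(g_img, g_vid)


if __name__ == "__main__":
    g_img = [1.0, 0.0, 2.0]
    g_vid = [0.0, 3.0, 0.0]  # orthogonal to g_img by construction
    print(cosine_similarity(g_img, g_vid), norm(g_img), norm(g_vid))
```

Reporting the norms alongside the cosine matters because a cosine near zero is only informative when neither gradient has itself shrunk toward zero.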

Ablation Study

Ablation in Training Strategy

We further evaluate temporal modeling performance using the FloVD Temporal Evaluation Protocol, which categorizes videos by optical flow magnitude (small, medium, large) and reports FVD for each category (lower is better). Our method achieves lower FVD scores than both image-only and two-stage training, confirming superior motion realism and temporal consistency on par with the original CogVideoX.

Details can be found in our paper.

| Method | Small | Medium | Large |
|---|---|---|---|
| CogVideoX-T2V† | 597.54 | 594.26 | 573.86 |
| Image-only | 641.92 | 636.42 | 680.34 |
| Two-stage | 801.97 | 872.30 | 824.03 |
| Ours | 512.30 | 511.66 | 550.14 |

† Pexels-finetuned CogVideoX
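To read the FVD table in relative terms, the snippet below computes the fractional FVD reduction of our method against each baseline per flow category. The numbers are copied from the table; the helper names are illustrative.

```python
# FVD per optical-flow category, copied from the table above (lower is better)
FVD = {
    "CogVideoX-T2V": {"small": 597.54, "medium": 594.26, "large": 573.86},
    "Image-only": {"small": 641.92, "medium": 636.42, "large": 680.34},
    "Two-stage": {"small": 801.97, "medium": 872.30, "large": 824.03},
    "Ours": {"small": 512.30, "medium": 511.66, "large": 550.14},
}


def relative_reduction(baseline, ours):
    """Fraction by which ours is lower than the baseline (positive = better)."""
    return (baseline - ours) / baseline


def reductions_vs(baseline_name, table=FVD):
    """Relative FVD reduction of 'Ours' against one baseline, per category."""
    return {
        bucket: relative_reduction(table[baseline_name][bucket], table["Ours"][bucket])
        for bucket in table["Ours"]
    }


if __name__ == "__main__":
    for name in FVD:
        if name != "Ours":
            summary = {k: f"{v:.1%}" for k, v in reductions_vs(name).items()}
            print(name, summary)
```

The gap to the Pexels-finetuned CogVideoX is smallest in the large-flow category, while the gap to two-stage training is large in all three.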

Ablation in Temporal-aware Preservation Training

Limitations

While our method achieves strong results with minimal training cost, there are several limitations to acknowledge.

Human Face Generation Failure

Summary of Limitations

- Human face generation: Our training dataset (Subject-200K) primarily comprises general objects and contains few human faces. While our method supports video customization for arbitrary inputs, this may hinder its effectiveness for human-specific personalization, often introducing blurring artifacts that fail to preserve nuanced identity features.

- Theoretical assumptions: The gradient analysis makes simplifying assumptions (local convexity, smoothness) that might not strictly hold for deep diffusion transformers, and requires further analysis in future work.

BibTeX

@article{kim2025subject,
  author = {Kim, Daneul and Zhang, Jingxu and Jin, Wonjoon and Cho, Sunghyun and Dai, Qi and Park, Jaesik and Luo, Chong},
  title = {Subject-driven Video Generation via Disentangled Identity and Motion},
  journal = {arXiv},
  year = {2025},
}